How do you build an AI agent with generative AI? The question opens the door to a new era of intelligent, self-directed systems. Unlike simple chatbots, modern AI agents can understand context, make decisions, and complete tasks with considerable autonomy.

In 2025, approximately 78% of companies in the US reported using AI in at least one business function, and the adoption of generative AI continues to rise across industries. This shift shows how quickly autonomous AI assistants are moving from experimentation to everyday business tools.

In this article, we will break down what an autonomous AI agent is, how generative models power it, and the exact steps to build one. You will learn the core components, recommended frameworks, real use cases, and best practices to create a reliable AI agent of your own.

Key Takeaways

- Autonomous AI agents go beyond chatbots by using memory, reasoning, and tools to plan and complete multi-step tasks independently.

- Different types of agents require different tech stacks, from knowledge assistants to full autonomous systems with monitoring and guardrails.

- A strong memory system is essential, combining short-term, long-term, and episodic memory to make the agent smarter and more reliable.

- Safety and governance must match the risk level of the agent, with stricter controls for finance, healthcare, and automation-heavy workflows.

- Companies can choose between managed, self-hosted, or hybrid deployment depending on their needs for speed, privacy, control, and compliance.

What Is an Autonomous AI Agent?

When learning how to build an AI agent with generative AI, it helps to first understand what an autonomous agent actually is. An autonomous AI agent is an intelligent system that can take a goal, decide the steps required, and execute those steps with minimal human supervision.

In practical terms, an autonomous AI assistant functions like a digital teammate. You give it an outcome, and it works independently to achieve it. This can include researching information, summarizing content, retrieving data from APIs, managing tasks, or coordinating multi-step workflows across different tools.

To operate with real autonomy, every agent relies on a set of core building blocks. These components also form the foundation of any strong AI agent framework:

- Generative model: This is the brain of the agent. It handles reasoning, problem-solving, language understanding, and multi-step planning.

- Memory system: Stores context, previous actions, user preferences, retrieved knowledge, and task history, helping the agent stay consistent across steps.

- Tools, APIs, and external actions: Allow the agent to interact with the world beyond text. This can include web search, database queries, email actions, workflow automation tools, or business applications.

- Planning and action loop: The agent observes the result of each action, decides the next step, corrects itself when needed, and continues until the goal is achieved.

Together, these elements give autonomous AI agents the ability to think, act, and self-correct, enabling practical real-world use cases that save time and enhance productivity.

How Generative AI Powers Autonomous AI Assistants

Understanding how to build an AI agent with generative AI starts with something simple: autonomy doesn’t come from rules. It comes from intelligence. Traditional workflows rely on predefined logic, but generative models give AI assistants the ability to think, reason, and adapt the way a human teammate would.

At the core of every autonomous system is a language model that can analyze information, interpret intent, and make decisions under uncertainty. This foundation helps an agent understand messy instructions and choose the next best step without constant guidance.

Generative AI makes this possible by enabling capabilities such as:

- Task decomposition: It can take a large, ambiguous goal and break it into smaller steps that the agent can act on.

- Contextual reasoning: The model understands nuance in language, past interactions, and user preferences to make better decisions.

- Adaptive planning: When something changes, the agent can update its plan rather than starting from scratch.

- Tool selection and execution: Based on reasoning, it picks the right tool or API, uses it, and interprets the output.

- Self-correction: The agent can evaluate its own results, spot errors, and adjust the next action automatically.

Together, these abilities turn a simple instruction into consistent, real-world outcomes.

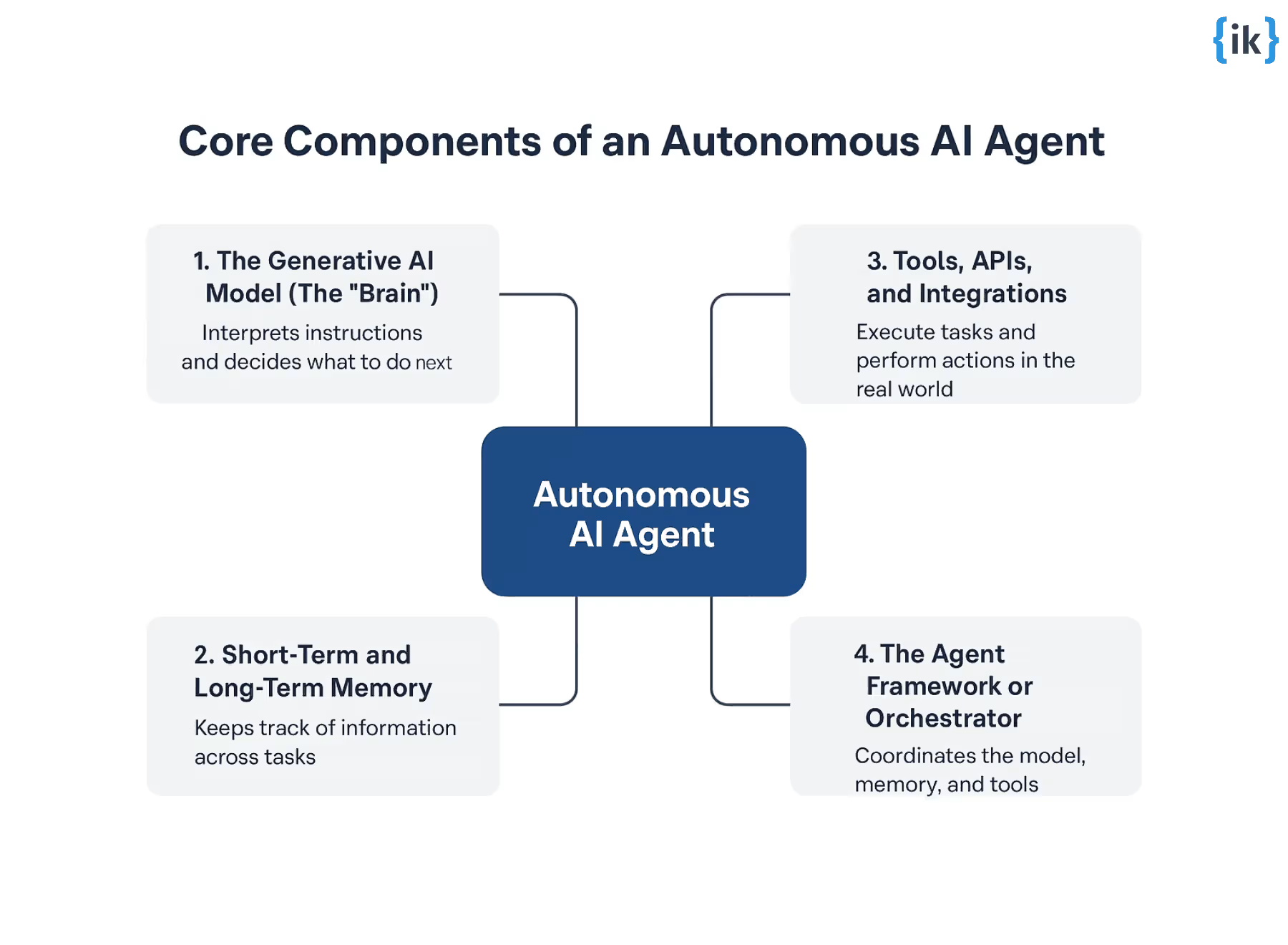

Core Components of an Autonomous AI Agent

If you want to understand how to build an AI agent with generative AI, you first need to know what actually makes an autonomous AI assistant work. Behind every smooth-running agent is a set of core building blocks that allow it to reason, plan, take action, and improve over time.

At a high level, an autonomous agent is made of four layers that work together: the brain, the memory, the tools, and the orchestration logic. Each plays a different role, but all are essential.

Here’s a breakdown of the key components:

- The generative AI model: This is the reasoning engine. It interprets instructions, breaks tasks down, and decides what to do next. This is where intelligence and autonomy truly originate.

- Short-term and long-term memory: Memory helps the agent stay context-aware across tasks.

  - Short-term memory keeps track of the current conversation, actions, and intermediate results.

  - Long-term memory stores facts, preferences, or past interactions so the agent becomes more useful over time.

- Tools, APIs, and integrations: Generative models reason, but tools execute. Agents rely on search APIs, databases, workflows, software actions, code interpreters, and more to complete real-world tasks.

- The agent framework or orchestrator: This is the operational layer that coordinates everything. It decides when the model should think, when the agent should retrieve memory, which tool to call, how to validate output, and how to move toward the final goal.

- Safety, guardrails, and error handling: A reliable autonomous AI assistant must detect failures, retry intelligently, avoid harmful actions, and know when to ask for clarification or escalate.

When combined, these pieces let an agent understand a task, plan, act, and deliver results with minimal help.

How AI Agents Use Planning, Reasoning, and Tools

Understanding how to build an AI agent with generative AI also means understanding how agents actually think. Modern autonomous AI assistants don’t simply execute commands; they run a continuous cycle of interpreting goals, breaking them down, choosing tools, checking their work, and adjusting based on results.

This loop is the core of every reliable AI agent framework and is what allows agents to behave more like capable teammates rather than rule-based bots.

Here’s a simple, builder-friendly table showing how planning, reasoning, and tools create real autonomy.

| Stage | What the Agent Does | Why It Matters |
| --- | --- | --- |
| 1. Understand Goal | Interprets the instruction, infers missing context | Ensures the agent knows what done looks like |
| 2. Decompose Task | Breaks the goal into smaller, logical subtasks | Gives structure and enables multi-step execution |
| 3. Plan & Prioritize | Determines order, dependencies, and possible paths | Helps the agent stay consistent and reduce errors |
| 4. Select Tools | Chooses APIs, databases, code interpreters, or workflows | Connects reasoning with real-world action |
| 5. Execute Actions | Runs the tool, processes results, checks for errors | Moves the task forward with minimal human input |
| 6. Self-Correct | Fixes mistakes, retries steps, updates the plan | Allows autonomy even when outcomes are unpredictable |
| 7. Deliver Output | Summarizes, formats, or executes the final result | Ensures clarity and usability for the end user |
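The stages in the table can be sketched as a small loop. This is a minimal illustration rather than a production design: `decompose`, `TOOLS`, and `run_agent` are hypothetical names, and the hard-coded plan stands in for what a generative model would return from a planning call.

```python
from typing import Callable

# Hypothetical tools: each takes a string and returns a string.
def search(query: str) -> str:
    return f"notes about {query}"

def summarize(text: str) -> str:
    return text.upper()

TOOLS: dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}

def decompose(goal: str) -> list[tuple[str, str]]:
    """Stand-in for the model's planning call: returns ordered (tool, input) steps."""
    return [("search", goal), ("summarize", "{previous}")]

def run_agent(goal: str, max_retries: int = 2) -> str:
    previous = ""
    for tool_name, arg in decompose(goal):        # stages 2-3: decompose and plan
        tool = TOOLS[tool_name]                   # stage 4: select the tool
        arg = arg.replace("{previous}", previous) # feed the last result forward
        for attempt in range(max_retries + 1):    # stages 5-6: execute, retry on error
            try:
                previous = tool(arg)
                break
            except Exception:
                if attempt == max_retries:
                    raise
    return previous                               # stage 7: deliver the output

result = run_agent("vector databases")
```

In a real agent, the plan would be regenerated after a failure instead of simply retrying the same step, which is what "updates the plan" in stage 6 refers to.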

Types of AI Agents You Can Build with Generative AI

To fully understand how to build an AI agent with generative AI, it helps to know the different categories of agents you can create because not all agents think, plan, or behave the same way. Each type solves a different class of problems, and choosing the right structure determines how autonomous, reliable, and scalable your solution will be.

Here’s a breakdown of the main AI agent types, when to use them, and how they fit into your workflow.

1. Reactive Agents

Reactive agents respond instantly based only on the current input. They don’t plan, store memory, or evaluate past actions.

Best for:

- Real-time chat responses

- Simple customer support

- FAQ-style interactions

- Fast classification tasks

Why they matter: They’re extremely fast and cheap to run, ideal when you don’t need full autonomy.

2. Goal-Based Agents

This is the classic form of an autonomous AI assistant. Goal-based agents take a high-level instruction and figure out how to achieve it using reasoning and planning. Capabilities of these agents are:

- Task decomposition

- Tool selection

- Multi-step execution

- Self-correction

- Progress monitoring

These agents are best for:

- Research tasks

- Workflow automation

- Content creation

- Data analysis

- Email or calendar management

Why they matter: This is the structure behind most modern AI agents used in startups and enterprises.

3. Tool-Based Agents

These agents are built around a suite of tools, APIs, or plugins. They excel at:

- Running code

- Performing web searches

- Querying databases

- Triggering automations

- Operating software

Example: An agent that monitors your inventory, generates purchase orders, and updates your ERP system.

Why they matter: They connect generative reasoning with real-world execution.

4. Memory-Centric Agents

These agents combine reasoning with short-term and long-term memory, allowing them to learn about:

- User preferences

- Past tasks

- Patterns and workflows

- Company-specific knowledge

These agents are best for:

- AI executive assistants

- CRM and sales agents

- Knowledge retrieval agents

- Personalized coaching systems

5. Multi-Agent Systems

This is the next evolution of AI agents. Instead of one agent doing everything, you create multiple specialized agents that collaborate.

Examples of such agents are:

- A research agent, an analysis agent, and a writer agent

- A data agent, an automation agent, and a reviewer agent

Benefits of using these agents are:

- Higher accuracy

- Faster execution

- Reduced error rates

- More modular architecture

Why they matter: Teams of agents outperform single general-purpose agents, especially in high-stakes enterprise use cases.
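A research, analysis, and writer team like the one above can be sketched as a simple sequential pipeline. The `Agent` class and `run_pipeline` function are hypothetical, and each lambda stands in for a model call with a role-specific prompt.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    name: str
    handle: Callable[[str], str]  # stand-in for a role-specific model call

def run_pipeline(agents: list[Agent], task: str) -> tuple[str, list[str]]:
    """Pass the task through each agent in order and record who handled it."""
    trace: list[str] = []
    payload = task
    for agent in agents:
        payload = agent.handle(payload)
        trace.append(agent.name)
    return payload, trace

team = [
    Agent("research", lambda topic: f"facts({topic})"),
    Agent("analysis", lambda facts: f"insights({facts})"),
    Agent("writer", lambda insights: f"report({insights})"),
]
output, trace = run_pipeline(team, "market size")
```

The trace makes it easy to see which specialist produced which intermediate result, which helps when one role needs to be swapped out or debugged in isolation.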

6. Enterprise Workflow Agents

These agents don’t just perform tasks; they own entire business processes.

Use cases of these agents are:

- Lead qualification and routing

- Automated reporting

- Customer onboarding

- HR operations

- Fraud checks

- Document processing

Why they matter: They unlock real ROI by replacing manual multi-step workflows.

7. Code-Generating & Self-Improving Agents

A growing class of agents that can:

- Debug their own code

- Write scripts or microservices

- Run and verify execution

- Improve their logic over time

These agents are best for:

- Technical teams

- Engineering automation

- Orchestrating backend workflows

Why they matter: They represent the future of autonomous software development.

Choosing the right type of agent is step zero in mastering how to build an AI agent with generative AI.

It determines:

- The level of autonomy

- Tool complexity

- Memory architecture

- Deployment stack

- Scalability and cost

- How users interact with the system

With the right structure, you move from a simple chatbot to a reliable, intelligent autonomous AI assistant that delivers real value.

Step-by-Step: How to Build an AI Agent with Generative AI

Building a real autonomous agent is very different from building a chatbot. When you truly understand how to build an AI agent with generative AI, you realize it involves aligning reasoning, memory, actions, and guardrails so the system can operate with intelligence and independence.

Below is a step-by-step process that organizations have used in 2025.

1. Define the Agent’s Purpose, Boundaries, and Success Criteria

The biggest mistake teams make is jumping straight into coding. High-performing autonomous agents start with clarity.

Clearly define:

- The problem the agent solves

- The types of tasks it will handle

- The context in which it operates (support, research, operations, automation)

- Task success metrics (speed, accuracy, autonomy level, cost per task)

- Escalation rules for handing off to humans
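One way to keep these definitions from staying on a whiteboard is to write them down as a small, typed spec that the rest of the system can check against. The sketch below is an assumption about how that might look; `AgentSpec`, its fields, and all the threshold values are illustrative.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AgentSpec:
    problem: str
    task_types: tuple[str, ...]
    context: str                       # e.g. support, research, operations
    min_accuracy: float                # success metric, 0.0 to 1.0
    max_cost_per_task_usd: float       # success metric
    escalate_below_confidence: float   # hand off to a human below this value

    def should_escalate(self, confidence: float) -> bool:
        return confidence < self.escalate_below_confidence

    def meets_targets(self, accuracy: float, cost_usd: float) -> bool:
        return accuracy >= self.min_accuracy and cost_usd <= self.max_cost_per_task_usd

# Illustrative values only.
spec = AgentSpec(
    problem="Answer billing questions from existing customers",
    task_types=("invoice lookup", "refund status"),
    context="support",
    min_accuracy=0.95,
    max_cost_per_task_usd=0.05,
    escalate_below_confidence=0.7,
)
```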

2. Select the Right Generative Model for the Job

Every model behaves differently. Choosing the wrong one limits your agent from day one.

During model selection, consider:

- Reasoning depth: Multi-step complexity, planning

- Latency: Real-time actions vs. background tasks

- Cost: Expected token volume per day and how pricing holds up at enterprise scale

- Safety: Regulated industries may favor more conservative models.

- Integration: Which environments or toolkits does the model support?

A few picks in 2025:

- GPT-5.1 / o1: Advanced reasoning, agentic operations

- Claude 3.5 Sonnet: High reliability, low hallucination rate

- Llama 3.3 70B: Self-hosted for sensitive data workloads

- Mistral Large: Strong balance of performance and cost

Choose an AI model intentionally because the core model is your agent’s brain.
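The selection criteria above can be turned into a simple weighted scorecard. Everything in this sketch is a placeholder: the candidate names, the 1 to 5 ratings, and the weights are invented for illustration and say nothing about any real model.

```python
# Weights reflect how much each criterion matters for this agent (sum to 1.0).
WEIGHTS = {"reasoning": 0.4, "latency": 0.2, "cost": 0.2, "safety": 0.1, "integration": 0.1}

# Hypothetical candidates rated 1 (poor) to 5 (excellent) by your own evaluation.
CANDIDATES = {
    "model-a": {"reasoning": 5, "latency": 2, "cost": 2, "safety": 4, "integration": 4},
    "model-b": {"reasoning": 3, "latency": 5, "cost": 5, "safety": 3, "integration": 3},
}

def score(ratings: dict[str, int], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of the ratings across all criteria."""
    return sum(weights[criterion] * ratings[criterion] for criterion in weights)

def pick_model(candidates: dict[str, dict[str, int]]) -> str:
    """Return the name of the candidate with the highest weighted score."""
    return max(candidates, key=lambda name: score(candidates[name]))

best = pick_model(CANDIDATES)
```

With these weights, the faster and cheaper `model-b` edges out the stronger reasoner. Raising the `reasoning` weight for a planning-heavy agent would flip the result, which is the point of making the trade-off explicit.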

3. Build a Real Memory Architecture

Memory is what distinguishes an autonomous agent from a regular chatbot: it lets the agent reason, plan, and improve over time. Autonomous agents use different types of memory depending on the task and on how long the information must be kept.

- Short-term memory: Used for active reasoning, step-by-step planning, and storing temporary inputs, outputs, and tool results within the current session.

- Long-term memory: Stores persistent data like user preferences, policies, historical decisions, and reusable knowledge to maintain continuity across sessions.

- Episodic memory: Captures session histories, reflections, failures, and improvement patterns to help the agent learn and evolve over time.

Tip: Memory should grow over time. Real agents evolve.
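A minimal sketch of the three memory types might look like the class below. `AgentMemory` and its method names are hypothetical, and a real system would back long-term and episodic memory with a database rather than in-process Python objects.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class AgentMemory:
    # Short-term: bounded window of recent steps in the current session.
    short_term: deque = field(default_factory=lambda: deque(maxlen=5))
    # Long-term: persistent facts and preferences that survive across sessions.
    long_term: dict = field(default_factory=dict)
    # Episodic: one record per finished session, used for later reflection.
    episodes: list = field(default_factory=list)

    def observe(self, item: str) -> None:
        self.short_term.append(item)  # oldest item drops off when the window is full

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def end_session(self, outcome: str) -> None:
        self.episodes.append({"steps": list(self.short_term), "outcome": outcome})
        self.short_term.clear()

memory = AgentMemory()
memory.remember("preferred_format", "bullet points")
for step in range(7):
    memory.observe(f"step {step}")
memory.end_session(outcome="success")
```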

4. Integrate the Tools and Actions Layer

This is where your agent stops being theoretical and starts achieving real outcomes.

Common tool categories are:

- Knowledge tools: web search, browsing, RAG

- Business tools: CRMs, ERPs, HRIS, ticketing systems

- Execution tools: Python sandbox, SQL executor, automation platforms (Zapier, Make, n8n)

- Communication tools: email, Slack, Teams, SMS

- Data tools: spreadsheets, cloud storage

Your agent becomes as capable as the tools you connect.
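A common way to wire tools into an agent is a registry that maps tool names to functions, so the orchestrator can look up whatever the model asks for. The sketch below assumes that pattern; the `tool` decorator, `TOOL_REGISTRY`, and both example tools are hypothetical and only pretend to do the work.

```python
from typing import Callable

TOOL_REGISTRY: dict[str, dict] = {}

def tool(name: str, category: str) -> Callable:
    """Register a function as a callable tool under the given name."""
    def decorator(fn: Callable) -> Callable:
        TOOL_REGISTRY[name] = {"fn": fn, "category": category, "doc": fn.__doc__ or ""}
        return fn
    return decorator

@tool("sql_query", category="execution")
def sql_query(query: str) -> str:
    """Pretend to run a read-only SQL query."""
    return f"rows for: {query}"

@tool("send_email", category="communication")
def send_email(to: str, body: str) -> str:
    """Pretend to send an email."""
    return f"sent to {to}"

def call_tool(name: str, **kwargs) -> str:
    """Dispatch a tool call by name, failing loudly on unknown tools."""
    if name not in TOOL_REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    return TOOL_REGISTRY[name]["fn"](**kwargs)

rows = call_tool("sql_query", query="SELECT 1")
```

The stored docstrings can double as the tool descriptions shown to the model when it decides which tool to call.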

5. Implement an Orchestration and Planning Engine

This is the system’s executive function, controlling the agent’s thinking and actions. A strong orchestrator supports:

- Task decomposition

- Action planning

- Branching logic

- Retry logic and reflection

- Tool choice reasoning

- Error catching and rerouting

- Multi-step workflows

- Monitoring model responses

The best autonomous agents combine LLM reasoning with deterministic orchestration.
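The "deterministic orchestration" half of that combination often comes down to plain control flow wrapped around the model or tool call. Here is a minimal sketch of bounded retry with output validation. `run_with_retry` and `flaky_step` are hypothetical, and the flaky step simulates a tool that times out twice before succeeding.

```python
from typing import Callable

def run_with_retry(
    step: Callable[[], str],
    validate: Callable[[str], bool],
    max_attempts: int = 3,
) -> str:
    """Run a step until it returns valid output or the attempt budget runs out."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            result = step()
            if validate(result):
                return result
            last_error = ValueError(f"attempt {attempt}: output failed validation")
        except Exception as exc:  # tool errors count against the same budget
            last_error = exc
    raise RuntimeError(f"step failed after {max_attempts} attempts") from last_error

calls = {"n": 0}

def flaky_step() -> str:
    """Simulated tool call that times out on its first two invocations."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("tool timed out")
    return '{"status": "ok"}'

result = run_with_retry(flaky_step, validate=lambda out: out.startswith("{"))
```

Keeping the retry budget in code, not in the prompt, means the agent cannot talk itself into looping forever.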

6. Add Guardrails, Policies, and “Do Not Cross” Boundaries

Guardrails protect the user, the business, and the agent itself.

Include controls for:

- Data access permissions

- Content filtering

- Preventing unsafe actions

- Restricting tool misuse

- Hallucination pattern detection

- Input validation

- Output safety checks

- Handling sensitive or regulated queries

Think of guardrails as the agent’s conscience and rulebook.
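Two of the controls above, input validation and output safety checks, can be sketched with nothing more than the standard library. The limits, the blocked phrase, and the email pattern below are illustrative assumptions. Real deployments typically layer dedicated moderation and PII-detection services on top of simple checks like these.

```python
import re

MAX_INPUT_CHARS = 2000
BLOCKED_PHRASES = ("ignore previous instructions",)  # illustrative, far from complete
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def validate_input(text: str) -> str:
    """Reject oversized or obviously adversarial input before it reaches the model."""
    if len(text) > MAX_INPUT_CHARS:
        raise ValueError("input too long")
    lowered = text.lower()
    for phrase in BLOCKED_PHRASES:
        if phrase in lowered:
            raise ValueError("input matches a blocked pattern")
    return text.strip()

def redact_output(text: str) -> str:
    """Mask email addresses in model output before it is shown or stored."""
    return EMAIL_PATTERN.sub("[redacted email]", text)

clean = validate_input("  What is my refund status?  ")
safe = redact_output("Contact jane.doe@example.com today")
```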

7. Test Against Realistic Scenarios

You must test beyond happy paths. Include test cases with:

- Ambiguity

- Missing data

- Conflicting instructions

- Slow or failing APIs

- Unexpected user inputs

- Adversarial phrasing

- Stress loads

- Multi-intent queries

This step shows you what breaks before your users find it.
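A table-driven harness is one lightweight way to cover several of these cases at once. In the sketch below, `fake_agent` is a hypothetical stand-in that returns a status string; in practice you would call your real agent and compare its behavior against the expected status for each scenario.

```python
def fake_agent(request: str, api_ok: bool = True) -> str:
    """Stand-in for a real agent; returns a coarse status string."""
    if not request.strip():
        return "clarify"      # missing data: ask the user instead of guessing
    if not api_ok:
        return "escalate"     # failing dependency: hand off instead of improvising
    if " and " in request:
        return "split"        # multi-intent: handle each intent separately
    return "done"

# (scenario name, request, whether the downstream API is healthy, expected status)
SCENARIOS = [
    ("happy path", "summarize Q3 report", True, "done"),
    ("missing data", "   ", True, "clarify"),
    ("failing API", "summarize Q3 report", False, "escalate"),
    ("multi-intent", "book a flight and email my manager", True, "split"),
]

def run_scenarios(agent=fake_agent) -> list[tuple[str, str, str]]:
    """Return (name, expected, actual) for every scenario that did not match."""
    failures = []
    for name, request, api_ok, expected in SCENARIOS:
        actual = agent(request, api_ok=api_ok)
        if actual != expected:
            failures.append((name, expected, actual))
    return failures

failures = run_scenarios()
```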

8. Deploy, Monitor, and Continuously Improve

Deployment isn’t just pushing the agent live. It’s lifecycle management.

After deployment, you should:

- Track performance metrics

- Log tool calls and failures

- Analyze user sentiment

- Monitor latency and cost spikes

- Add new tools as needs grow

- Retrain or fine-tune on real data

- Expand memory as the agent learns

- Introduce new capabilities gradually

Great agents never stay static. They evolve as your users evolve.

How to Choose the Right Tech Stack for Building an AI Agent

Selecting the right tech stack is one of the most strategic decisions when planning how to build an AI agent with generative AI. A strong stack isn’t just tools; it’s a set of decisions that aligns capability with business reality.

Here are some tips on how to choose the right components, based on use case, autonomy level, cost, and deployment environment.

1. Start With the Agent Type You’re Building

Different autonomous agents need different stacks. Define your category first. The categories include:

- Knowledge agents: These agents are used for research, Q&A, and internal knowledge tasks and need fast retrieval, rich context handling, and strong indexing.

- Task automation agents: Handle tasks like email processing, CRM updates, and operations. They require reliable tools, error handling, and robust workflow orchestration.

- Multi-step reasoning agents: These agents need deep reasoning, memory retention, and extended thought chains. Key stack elements are memory systems, reflection loops, and planning modules.

- Full autonomous agents: Take end-to-end ownership of workflows. They require monitoring, guardrails, and safe autonomy.

Knowing your agent type shapes every technical choice that follows.

2. Choose a Memory Strategy

Previously, we discussed memory types; here, we focus on how to pick the right memory architecture. The main selection criteria are:

- Latency needs: in-memory vs. cloud-based

- Volume of knowledge: MB? GB? TB?

- Update patterns: static docs vs. dynamic enterprise data

- Privacy: internal vs. public data

- Personalization: per-user memory vs. global memory

Examples of memory strategies by agent type:

- Knowledge agent → high-recall vector DB + metadata filters

- Automation agent → lightweight key-value memory

- Enterprise agent → hybrid: vector DB + relational DB

- Customer support agent → per-session episodic memory

Memory architecture defines your agent’s intelligence.
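To make the "vector DB + metadata filters" strategy concrete, here is a toy version in pure Python. The three-dimensional vectors stand in for real embeddings and the `DOCS` list stands in for a vector database. The names and data are all hypothetical.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Toy index: each entry has an id, a metadata field, and a made-up embedding.
DOCS = [
    {"id": "hr-1", "dept": "hr", "vec": [1.0, 0.0, 0.0]},
    {"id": "hr-2", "dept": "hr", "vec": [0.6, 0.8, 0.0]},
    {"id": "fin-1", "dept": "finance", "vec": [0.9, 0.1, 0.0]},
]

def retrieve(query_vec: list[float], dept: str, k: int = 1) -> list[str]:
    """Filter by metadata first, then rank the remaining docs by similarity."""
    candidates = [doc for doc in DOCS if doc["dept"] == dept]
    ranked = sorted(candidates, key=lambda doc: cosine(query_vec, doc["vec"]), reverse=True)
    return [doc["id"] for doc in ranked[:k]]

hr_hits = retrieve([1.0, 0.0, 0.0], dept="hr", k=2)
```

Filtering before ranking keeps documents from other departments out of the results even when they are semantically close to the query, which is how metadata filters support the privacy criterion above.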

3. Select an Orchestration Approach

This is the real differentiator. Rather than naming frameworks, this section explains how to decide which orchestration method fits your agent.

- Single-Model Orchestration: For lightweight agents that only need sequential reasoning.

  - Pros: Simple. Cheap. Easy to maintain.

  - Cons: Limited autonomy.

- LLM & Deterministic Logic Hybrid: Best for enterprise workflows.

  - Pros: Predictable, safe, auditable.

  - Cons: Requires engineering overhead.

- Multi-Agent Collaboration: Different roles work together.

  - Pros: High-quality output, modular thinking.

  - Cons: More computing, more moving parts.

- Tool-First Orchestration: LLM acts mainly as a controller, calling specific tools.

  - Pros: Reliable, low hallucination.

  - Cons: Requires strong APIs.

This choice impacts cost, speed, intelligence, and autonomy.

4. Decide If You Need Managed vs. Self-Hosted Infrastructure

This is where businesses often overspend or overcomplicate.

| Type | Best For | Strengths | Limitations |
| --- | --- | --- | --- |
| Managed | Speed, simplicity, maintenance-free scaling | Always updated, safe defaults, easy tool integration | Limited control, compliance constraints for certain industries |
| Self-Hosted | Privacy-sensitive industries, large-scale internal agents | Full control, lower long-term cost at scale | Requires ML ops maturity, hardware management |
| Hybrid | Enterprises using a mix of public and internal data | Flexible, balanced control | Integration complexity |

5. Build a Tooling Ecosystem That Matches the Agent’s Autonomy Level

Instead of re-listing tools, this section explains how to pick them.

Key questions to ask:

- Does the tool support API-level control?

- Will the agent ever need to chain actions across tools?

- What’s the failure tolerance?

- How will authentication work?

- Do you need logging & audit trails for compliance?

You’re not just selecting tools, you’re selecting the actions your agent can perform.

6. Embed Safety and Governance Based on Risk Profile

AI agents should have safety measures and governance protocols tailored to their potential impact and the risks they pose. The level of oversight varies depending on the agent’s role and the sensitivity of its tasks.

- Low-risk agents: These agents handle tasks where mistakes have minimal consequences, such as creative brainstorming tools.

- Medium-risk agents: Medium-risk agents interact with customers or manage operational processes, where errors could have moderate consequences.

- High-risk agents: High-risk agents operate in critical domains like finance, healthcare, or enterprise automation, where mistakes can have serious legal, financial, or safety repercussions.
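One way to make the tiers enforceable is to map each one to a set of required controls and check a deployment against it. The specific controls assigned to each tier below are an assumption for illustration; your own policy and regulators decide what each tier actually requires.

```python
from enum import Enum

class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"

# Illustrative policy: each tier adds controls on top of the previous one.
CONTROLS: dict[Risk, set[str]] = {
    Risk.LOW: {"audit_log"},
    Risk.MEDIUM: {"audit_log", "output_validation"},
    Risk.HIGH: {"audit_log", "output_validation", "human_approval"},
}

def missing_controls(risk: Risk, enabled: set[str]) -> set[str]:
    """Return the controls required for this tier that are not yet enabled."""
    return CONTROLS[risk] - enabled

def is_compliant(risk: Risk, enabled: set[str]) -> bool:
    return not missing_controls(risk, enabled)

gaps = missing_controls(Risk.HIGH, enabled={"audit_log", "output_validation"})
```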

7. Plan for Observability, Logging, and Monitoring

A mature autonomous agent requires visibility into:

- Tool failures

- Unexpected reasoning patterns

- Memory retrieval issues

- Cost spikes

- Latency trends

- User satisfaction

- Hallucination frequency

- Task success score

This is where real-world reliability comes from.
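Several of these signals can be captured with a small in-process recorder before you adopt a full observability stack. `AgentMetrics` is a hypothetical sketch. In production you would more likely export these numbers to a metrics or tracing system than keep them in memory.

```python
from dataclasses import dataclass, field

@dataclass
class AgentMetrics:
    latencies_ms: list = field(default_factory=list)
    costs_usd: list = field(default_factory=list)
    tasks: int = 0
    successes: int = 0
    tool_failures: int = 0

    def record(self, latency_ms: float, cost_usd: float, success: bool,
               tool_failed: bool = False) -> None:
        """Record the outcome of one completed task."""
        self.latencies_ms.append(latency_ms)
        self.costs_usd.append(cost_usd)
        self.tasks += 1
        self.successes += int(success)
        self.tool_failures += int(tool_failed)

    def summary(self) -> dict:
        n = self.tasks
        return {
            "task_success_rate": self.successes / n if n else 0.0,
            "avg_latency_ms": sum(self.latencies_ms) / n if n else 0.0,
            "total_cost_usd": round(sum(self.costs_usd), 6),
            "tool_failures": self.tool_failures,
        }

metrics = AgentMetrics()
metrics.record(latency_ms=100, cost_usd=0.01, success=True)
metrics.record(latency_ms=300, cost_usd=0.03, success=False, tool_failed=True)
report = metrics.summary()
```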

Key Challenges, Risks, and Guardrails When Building Autonomous AI Agents

Even when you understand how to build an AI agent with generative AI, the real difficulty lies in managing the risks that come with autonomy. As agents gain the ability to reason, plan, and take actions across systems, the margin for error increases dramatically.

Here are the most critical challenges and the guardrails every team must put in place before deploying an autonomous AI assistant in production.

Challenge 1: Reasoning Errors and Hallucinations

Generative models can produce incorrect or fabricated information, especially when:

- Tasks require multi-step reasoning

- Data is ambiguous or missing

- The agent needs domain-specific expertise

- External tools return unclear results

Guardrails:

- Use constrained prompting and structured task formats

- Add validation steps for critical outputs

- Implement fallback responses when confidence is low

Challenge 2: Uncontrolled Action Execution

Autonomous AI agents can trigger workflows or APIs incorrectly, leading to real-world consequences such as:

- Wrong data updates

- Accidental email sends

- Faulty API calls

- Misuse of tools

Guardrails:

- Add human-in-the-loop approval for high-risk actions

- Enforce strict permission scopes

- Build “allowed tools only” execution policies
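The allowlist and human-in-the-loop guardrails can be combined in a single gate that every tool call passes through. This is a minimal sketch under assumed names: `ALLOWED_TOOLS`, `HIGH_RISK_TOOLS`, and the `approver` callback are hypothetical, and a real approval step would usually be asynchronous.

```python
from typing import Callable, Optional

ALLOWED_TOOLS = {"read_crm", "send_email"}   # "allowed tools only" policy
HIGH_RISK_TOOLS = {"send_email"}             # these also need a human sign-off

def execute(
    tool_name: str,
    action: Callable[[], str],
    approver: Optional[Callable[[str], bool]] = None,
) -> str:
    """Run the action only if the tool is allowed and, when required, approved."""
    if tool_name not in ALLOWED_TOOLS:
        raise PermissionError(f"tool not allowed: {tool_name}")
    if tool_name in HIGH_RISK_TOOLS:
        if approver is None or not approver(tool_name):
            return "blocked: awaiting human approval"
    return action()

low_risk = execute("read_crm", lambda: "42 accounts")
unapproved = execute("send_email", lambda: "sent")
approved = execute("send_email", lambda: "sent", approver=lambda name: True)
```

Because the action is passed in as a callable, nothing with side effects runs until both checks have passed.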

Challenge 3: Memory Misuse or Drift

Memory enables personalization and long-term autonomy, but it also introduces risks:

- Storing unnecessary or sensitive data

- Using outdated memory for decisions

- Overgeneralizing from past interactions

Guardrails:

- Define strict memory write rules

- Auto-expire outdated entries

- Use metadata tagging for controlled retrieval
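Auto-expiry and tag-controlled retrieval can be sketched with a small wrapper around a dictionary. `ExpiringMemory` is a hypothetical class, and the injectable `clock` argument exists only so the demo can simulate time passing without sleeping.

```python
import time
from typing import Callable, Iterable, Optional

class ExpiringMemory:
    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._items: dict[str, tuple[str, frozenset, float]] = {}

    def write(self, key: str, value: str, tags: Iterable[str] = ()) -> None:
        """Store a value along with its tags and the time it was written."""
        self._items[key] = (value, frozenset(tags), self._clock())

    def read(self, key: str, required_tag: Optional[str] = None) -> Optional[str]:
        """Return the value unless it is missing, expired, or lacks the required tag."""
        entry = self._items.get(key)
        if entry is None:
            return None
        value, tags, written_at = entry
        if self._clock() - written_at > self._ttl:
            del self._items[key]  # drop stale entries so they cannot drive decisions
            return None
        if required_tag is not None and required_tag not in tags:
            return None
        return value

now = [0.0]  # controllable fake clock for the demo
memory = ExpiringMemory(ttl_seconds=60, clock=lambda: now[0])
memory.write("plan_tier", "enterprise", tags={"billing"})
fresh = memory.read("plan_tier", required_tag="billing")
wrong_tag = memory.read("plan_tier", required_tag="marketing")
now[0] = 61.0
stale = memory.read("plan_tier", required_tag="billing")
```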

Challenge 4: Ambiguous Objectives and Over-Autonomy

If the agent does not fully understand the task, it might improvise, sometimes too creatively. This is risky in enterprise settings.

Guardrails:

- Use explicit task boundaries

- Add step-by-step decomposition requirements

- Include stop conditions and success criteria

Challenge 5: Security, Privacy, and Compliance Risks

Autonomous agents interact with sensitive systems and datasets, which makes them potential attack vectors.

Guardrails:

- Enforce access control and least-privilege design

- Sanitize all model inputs

- Implement audit logging for every action

- Use encrypted storage for memory systems

Challenge 6: Reliability and Reproducibility

Generative AI is probabilistic, so results can vary between runs even with the same prompt. This becomes an issue for:

- Financial workflows

- Customer operations

- Regulated domains

Guardrails:

- Use deterministic settings where possible

- Enforce step logs to reproduce reasoning

- Add agent-level retry logic with constraints
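Step logs are easier to compare across runs if each entry carries a stable fingerprint of its inputs, outputs, and settings. The sketch below assumes a `StepLog` class of our own invention. The `temperature` key is shown as an example of a setting you might record, not as a reference to any particular model API.

```python
import hashlib
import json

def fingerprint(record: dict) -> str:
    """Short, stable hash of a record (keys sorted so ordering does not matter)."""
    canonical = json.dumps(record, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

class StepLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, step: str, prompt: str, output: str, settings: dict) -> str:
        """Append one step and return its fingerprint."""
        entry = {"step": step, "prompt": prompt, "output": output, "settings": settings}
        entry_id = fingerprint(entry)  # computed before the id is added to the entry
        entry["fingerprint"] = entry_id
        self.entries.append(entry)
        return entry_id

run_a, run_b = StepLog(), StepLog()
settings = {"temperature": 0}  # example of a recorded setting
id_a = run_a.record("classify", "Is this invoice overdue?", "yes", settings)
id_b = run_b.record("classify", "Is this invoice overdue?", "yes", settings)
id_c = run_b.record("classify", "Is this invoice overdue?", "no", settings)
```

Matching fingerprints across two runs indicate the step behaved identically, and the first mismatch points to where the runs diverged.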

Conclusion

Learning how to build an AI agent with generative AI is no longer just a technical skill; it’s a strategic advantage for anyone preparing for the future of work. As autonomous agents become more capable, more reliable, and more deeply integrated into enterprise systems, teams that understand how to design, evaluate, and deploy them will lead the next wave of innovation.

Looking ahead to 2026, we’ll see AI agents evolve toward richer long-term memory, stronger multimodal reasoning, cross-tool orchestration, and fully autonomous workflows that span entire business functions. Agents will shift from being assistants to becoming true operational partners, capable of handling complex decision-making with human oversight.

If you want to accelerate your career in this direction, the Agentic AI Career Boost program by Interview Kickstart is one of the most comprehensive ways to gain hands-on mastery.

As the agentic revolution unfolds, those who build the right skills today will be the ones shaping the AI-powered organizations of tomorrow.

FAQs: How to Build an AI Agent with Generative AI

Q1. What is an autonomous AI assistant, and how does it differ from a chatbot?

An autonomous AI assistant is built to take a high-level goal, break it down, reason, plan, act via tools/APIs, and self-correct, all with minimal human oversight. A chatbot mostly just reacts to user prompts without long-term memory, planning, or real-world actions.

Q2. Which components are essential when building an AI agent framework?

You need at least four core components: a generative model, a memory system, tools/APIs for external actions, and an orchestration layer that plans, executes, and monitors tasks.

Q3. When should I use short-term memory vs long-term memory in an AI agent?

Use short-term memory for current-session reasoning, step-by-step planning, and temporary data. Use long-term memory to store persistent info like user preferences, policies, knowledge, or past tasks, so the agent stays useful across sessions.

Q4. What kinds of real-world tasks are good use cases for autonomous AI assistants?

Autonomous AI assistants shine at multi-step workflows like research and summarization, data analysis, business process automation, content creation, decision support, and even cross-system automation combining APIs, databases, and user input.

Q5. What are the main risks when building autonomous AI agents, and how can they be mitigated?

Risks include hallucinations, unintended tool or API execution, memory misuse or drift, ambiguity in goals, security/privacy issues, and reproducibility problems. To mitigate, add guardrails, output validation, access control, and fallback or human-in-the-loop mechanisms.